Question What You Read

The problem with oversimplifying and sensationalizing science: a discussion of microplastics research methodologies

Each week, we ingest about a credit card’s worth of microplastics. Or at least, that’s a headline that has been circulating since last year at places like the WWF, CNN (assuming there are still people who watch CNN), and the BBC. Yet after looking into this claim more closely, I do not think that ingesting those kinds of quantities is very likely, unless you work in a plastics or textiles factory or perhaps some kind of waste processing facility. I had assumed these findings were accurate myself until I dove into the research on micro- and nanoplastics (MNPs) for this newsletter, so I felt it was important to also spend some time on how scientific studies are reported and discussed in the media.

Unfortunately, overly simplified or sensationalist reporting can distort people’s understanding of how science works (or is supposed to work, at least), making it harder to understand the world around us and to solve the problems the scientists were hoping to shed light on. This article is by no means meant to be an exhaustive analysis of how scientific discoveries are communicated, but what we discuss can hopefully serve as a starting point to help you make sense of the complex world of research. The goal of this newsletter has always been to improve understanding of complex topics and provide you with the knowledge and tools to make sense of the world around you. I believe that should be the goal of any reporting, but as has been extensively documented both here and elsewhere, ideological, political, and economic imperatives all too often trump the pursuit of truth that people in this profession should be striving for.

In this second article in our series on microplastics, we are going to look at two common problems in reporting on scientific research that can contribute to the spread of inaccurate information and the misinterpretation of scientific findings. In the previous post, we looked at what micro/nanoplastics (MNPs) are, where they come from, and what they are made of. Check it out here in case you missed it.

💡In this series of posts you can expect to learn more about the following aspects of plastic pollution:

Looking beyond the headlines – avoiding sensationalism in reporting on scientific research

Health risks of microplastics

Environmental consequences of microplastics

What can we do to address plastic pollution?

Issue 1 – Not realizing or mentioning that assumptions and methodologies affect research outcomes

So where do claims like ‘ingesting one credit card a week’ come from? In this case, the claim is based on the results of a study that looked at human exposure to microplastics. However, what is left out of discussions around this admittedly catchy claim is the wider context of how the researchers arrived at their conclusion. As with many studies and complicated models, especially in fields like environmental science that often study complex, interconnected systems, researchers need to make certain assumptions about which data to use and how to interpret the results. Since these assumptions and interpretations can affect the outcome of a study, it is important to be aware of exactly how researchers arrived at their conclusions. Any reliable reporting on these kinds of studies should mention this, especially when generalizations are made based on their results.

Some examples:

A study had only a small number of subjects and used the results to draw conclusions about a larger population

A study examined parts of an organ and used the results to draw conclusions about the organ or body as a whole

A study combined results from different studies even though they used different research methods or equipment

Let’s take a look at the assumptions that were made for the study mentioned in the introduction. The study in question, commissioned by the WWF, compiled the results of a variety of other studies to try to estimate how many microplastics we ingest. However, because the different studies used different detection methods or looked for MNPs in different places, the researchers made certain assumptions and used extrapolations to combine the data.

To estimate how many and what kind of microplastic particles get ingested, the researchers combined studies that measured microplastic mass and quantities in different media like ocean water, beer, salt, and shellfish. They also made assumptions about how much of these media we ingest, for instance by assuming that we consume about 4 liters of bottled (so not ocean!) water and about 73 grams of salt a week.

Moreover, the studies used to estimate things like average microplastic mass and quantities had different detection limits, meaning that some were able to detect smaller particles whereas others could not. It seems reasonable to assume that if a detection method can detect smaller particles, your measured quantity will be much higher and your average particle size or mass much smaller. Therefore, I would find a study that only combined particle counts and masses from studies using the same detection methods much more compelling.
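To make the detection-limit argument concrete, here is a minimal Python sketch. It assumes a hypothetical sample in which particle counts follow a power law (many small particles, few large ones) and treats every particle as a sphere with a density of 1 g/cm³; the size classes, exponent, and counts are illustrative assumptions, not values from any of the studies discussed. Lowering the detection limit adds many small, light particles, so the count goes up while the mean particle mass goes down.

```python
import math

# Hypothetical sample: particle size classes in micrometres. Counts follow an
# assumed power law; none of these numbers come from a real study.
SIZES_UM = [1, 2, 5, 10, 20, 50, 100, 200, 500, 1000]
ALPHA = 2.0  # assumed power-law exponent for particle counts per size class


def count_in_class(size_um: float) -> float:
    """Hypothetical number of particles in a size class (more small than large)."""
    return 1e6 * size_um ** -ALPHA


def particle_mass_ng(size_um: float, density_g_cm3: float = 1.0) -> float:
    """Mass of one particle, treated as a sphere with diameter size_um."""
    radius_cm = size_um * 1e-4 / 2  # 1 micrometre = 1e-4 cm
    volume_cm3 = 4 / 3 * math.pi * radius_cm ** 3
    return volume_cm3 * density_g_cm3 * 1e9  # grams -> nanograms


def measure(detection_limit_um: float) -> tuple[float, float]:
    """Return (particle count, mean particle mass in ng) for particles a method can see."""
    visible = [s for s in SIZES_UM if s >= detection_limit_um]
    count = sum(count_in_class(s) for s in visible)
    total_mass = sum(count_in_class(s) * particle_mass_ng(s) for s in visible)
    return count, total_mass / count


for limit in (1, 20, 100):
    n, mean_mass = measure(limit)
    print(f"detection limit {limit:>4} um: {n:>12,.0f} particles, mean mass {mean_mass:.3g} ng")
```

Because mass scales with the cube of particle size, most of the total mass in this toy sample sits in the largest size classes, which is why assumptions about particle size and mass matter so much when particle counts are converted into weights.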

Long story short, all these different assumptions have an effect on the numbers chosen or calculated and therefore on the results you get when you use said numbers to make models and do further calculations.

The main question is what happens when we look at studies that do use methods with similar detection limits and that, instead of assuming all particles have a certain mass, size, or shape, take the size distribution of the particles into account. Well, the mass of microplastics we ingest suddenly becomes much smaller. For example, a study by researchers at Wageningen University that did just that estimated the mean microplastics intake for an adult at 5.83 * 10^-4 milligrams per day (mg/day), or about 583 nanograms, compared with the 714 mg/day proposed by the WWF study: an estimate over a million times lower.
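As a quick sanity check on these figures, the sketch below converts the WWF estimate into grams per week and compares it with the Wageningen adult estimate, taken here as roughly 583 nanograms per day (5.83 × 10^-4 mg/day). It is plain unit arithmetic; the variable names are my own.

```python
# Unit check for the intake comparison (a sketch using the figures quoted in the text).
MG_PER_G = 1_000
NG_PER_MG = 1_000_000

wwf_mg_per_day = 714          # WWF-commissioned estimate, mg per day
wageningen_ng_per_day = 583   # Wageningen adult estimate, taken as ~583 ng per day

wwf_g_per_week = wwf_mg_per_day * 7 / MG_PER_G          # about 5 g: the 'credit card'
wageningen_mg_per_day = wageningen_ng_per_day / NG_PER_MG
fold = wwf_mg_per_day / wageningen_mg_per_day           # how many times larger the WWF figure is

print(f"WWF: {wwf_g_per_week:.1f} g per week")
print(f"Wageningen: {wageningen_mg_per_day:.2e} mg per day")
print(f"WWF estimate is ~{fold:,.0f} times higher")
```

The ratio comes out at a little over 1.2 million, which is where the "over a million times lower" comparison comes from.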

Not that I am arguing that we should now take the Wageningen numbers as undeniable truth, although their methods seem more accurate and their results are much more in line with what other studies are finding. The truth of the matter is that it remains extremely difficult to estimate accurately how many MNPs we actually ingest. Detection methods and their accuracy vary across studies, for example in the size of particles they can detect (as we saw earlier), and there are also many different types and sources of plastics (as we discussed in the previous post on this topic). Moreover, how much you are actually exposed to can vary a lot depending on where and how you live. And that is before we even consider how your biology and lifestyle influence the effect these particles have on your health. We’ll take a closer look at these aspects in upcoming articles in this series.

This example illustrates how much research methodologies matter, and therefore how essential it is to include information about them in science reporting. It is a compelling argument for ensuring that media reporting on science is done by competent, honest people who understand the scientific method well enough to avoid creating or furthering misconceptions about the accuracy or reliability of studies. Researchers’ assumptions and methods can make the difference between accurate, replicable results that stand the test of time and misleading results that can send an entire field astray for years.

Issue 2 – Condensing complex science and nuanced results into attention-grabbing headlines

The other reason I started with examples of media reporting on ingesting credit cards is that I have seen this kind of thing happen a lot over the years. For example, I remember someone once scolding me for my cheese consumption because they claimed cheese is as addictive as crack cocaine. A quick internet search indeed revealed many headlines and articles containing this claim or some variation of it. Yet when I actually read the study that was the source of these claims, the claim was nowhere to be found.

I can give you another example using an article I wrote in 2022 on the effects of increasing droughts on agriculture in Europe. I could easily have spread the truthful claim that European crop losses due to droughts tripled between 1991 and 2005. Yup. You read that right. ‘Tripled!’ I might have written if I worked at CNN. Yet what I would have left out is that over the same period, total yields rose by 146% thanks to innovations in agricultural methods. In other words, these gains more than offset the 3-9% of crop losses attributable to drought. Of course, it is still a worrying trend, and one that should be slowed down or reversed as much as possible, but with this wider context we suddenly have a wholly different perspective on the issue.
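The drought example comes down to simple arithmetic. This sketch rests on two interpretive assumptions rather than on the underlying study: that drought losses rose from 3% to 9% of output (a tripling), and that a 146% rise in yields means an output index going from 100 to 246.

```python
# Illustrative check of the drought example. Assumed reading of the figures:
# losses rise from 3% to 9% of output, and output rises by 146% (index 100 -> 246).
def net_output(potential_index: float, loss_share: float) -> float:
    """Output left after subtracting a fractional drought loss."""
    return potential_index * (1 - loss_share)


before = net_output(100, 0.03)
after = net_output(100 * (1 + 1.46), 0.09)

print(f"net output before: {before:.1f}, after: {after:.1f}")
print(f"loss share grew {0.09 / 0.03:.0f}x, yet net output grew {after / before:.2f}x")
```

Under this reading, losses triple as a share of output while net output still more than doubles, which is exactly the context a ‘Tripled!’ headline would leave out.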

Often these misinterpretations start with an ordinary research paper condensed into an exciting headline, perhaps innocently devised as an engaging hook for a nuanced interview with the researchers. Or perhaps a reporter is looking for a way to make the often mundane process of scientific inquiry more exciting, so they can successfully pitch it to their editor or get people to actually pay attention to their work. Or perhaps the paper in question only looked at a specific aspect of a larger issue, and its results are then reported without the context needed to avoid misinforming the general public. Subtlety, nuance, and uncertainty have a curious way of getting lost in our ad-driven, attention-hungry media landscape.

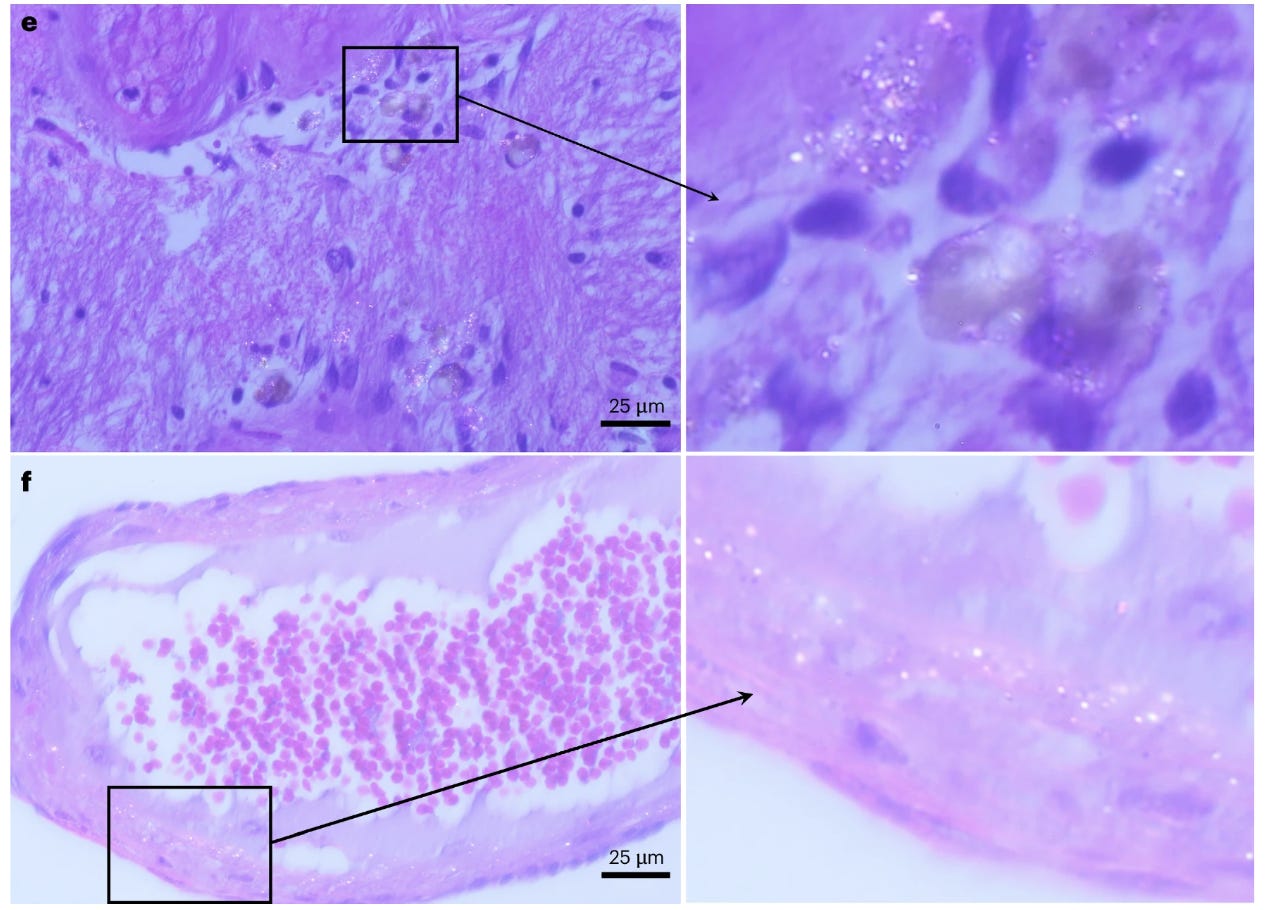

Also, let us not forget that reporters are not the only ones who benefit from sensationalism. Getting attention for your research can be a great way to get your next project funded, so there is an incentive on all sides to make even the most unassuming studies seem groundbreaking. I am reminded of a recent study on MNP concentrations in the human brain. The researchers used tissue samples from 40 brains to look for MNPs, and concluded that there was a trend of increasing concentrations in the brain and liver.

In an interview, the study’s co-lead author said its results suggested that we have a plastic spoon’s worth of plastic inside our brains. When I took the study’s concentrations and multiplied them by the average weight of a human brain, I did arrive at roughly the weight of a plastic spoon, so his estimate is presumably based on the assumption that the concentrations found in the samples apply to the brain as a whole. As he acknowledged, though, “current methods of measuring plastics may have over- or underestimated their levels in the body,” which I would argue makes using sample concentrations to draw conclusions about the organ as a whole quite problematic. Based on what I have read, I have several questions that make me doubt the veracity of a claim like ‘we have a plastic spoon’s worth of plastic in our brains’:
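The extrapolation behind the spoon comparison is essentially a one-line multiplication, and a small variation shows why it is so sensitive to the assumption that the sampled tissue is representative. In this sketch the concentrations are hypothetical placeholders, not values reported in the study, and the brain mass of about 1,350 g is a rough adult average.

```python
# Hypothetical illustration: the concentrations below are placeholders,
# not figures from the brain study.
BRAIN_MASS_G = 1_350  # rough adult brain mass, in grams


def whole_organ_mass_g(sampled_mg_per_g: float, sampled_fraction: float,
                       elsewhere_mg_per_g: float, organ_mass_g: float = BRAIN_MASS_G) -> float:
    """Total plastic mass (g) if the sampled concentration holds for only part of the organ."""
    avg_mg_per_g = (sampled_fraction * sampled_mg_per_g
                    + (1 - sampled_fraction) * elsewhere_mg_per_g)
    return avg_mg_per_g * organ_mass_g / 1_000  # mg -> g


# Extrapolating the sample to the whole organ, versus assuming the sampled
# region covers only 20% of it and the rest holds a tenth of that concentration.
uniform = whole_organ_mass_g(5.0, 1.0, 5.0)
patchy = whole_organ_mass_g(5.0, 0.2, 0.5)
print(f"assume uniform: {uniform:.2f} g; assume sampled region is atypical: {patchy:.2f} g")
```

The same sample concentration can imply very different whole-organ totals depending on an assumption the samples themselves cannot confirm.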

How accurate are these methods for estimating or measuring MNP concentrations? To what degree do these sample concentrations tell us something about the brain as a whole? And how representative of the population are these 40 brains, which came from U.S. East Coast repositories? To answer that, we would need to know more about the people these brains came from, so that their lifetime exposure to plastics could be estimated. Some of these questions and uncertainties are also raised in the study’s discussion. For example, the researchers acknowledge that the analytical methods are not yet standardized and that some particles may have been under- or overcounted.

I am not necessarily criticizing the study itself, since its goal was to examine the presence of MNPs in the brain and liver, learn more about the particles’ properties, and determine whether there was a visible trend over time. Instead, I mention this example because, as long as the answers remain as uncertain as I would argue they are given the current state of MNP research, I think we should avoid sensationalizing or overgeneralizing research findings and stick to what we do know: we are finding tiny plastic particles all over the body, and these concentrations appear to be rising, which is concerning in and of itself.

Conclusion

As we discussed, how much plastic we unknowingly ingest remains up for debate, and comparing different studies is challenging due to the wide variety of detection and research methods, each with its own shortcomings, inaccuracies, and potential for interference from other factors.

The good news is that, in the case of MNP research, methodologies are increasingly being standardized, which should make it easier to compare the findings of different studies and thereby make more accurate estimates of the scale of the problem. Over the coming decade, we will hopefully get more concrete answers to questions like how much we are ingesting, how much of that is actually harmful, and exactly how harmful it is. But we are not there yet, so it seems prudent to take bold claims about ingesting credit cards or having the equivalent of a plastic spoon inside our brains with a grain of salt, or rather, a microplastic particle the size of a grain of salt.

Of course, the best way to avoid being misinformed is to actually read the studies mentioned in news reports or headlines, check their methodologies and data, and make sure that these studies actually demonstrate anything like what is being claimed. But we cannot all be experts or be expected to know all the ins and outs of different scientific fields. That is why, first of all, we should check whether studies have been peer-reviewed, meaning that other researchers, usually in the same field, have evaluated the work. But perhaps most crucially, we should not place our trust in outlets or people who are more concerned with advancing economic interests or their careers than with reporting the truth, or who are not knowledgeable or capable enough to give you an accurate representation of the actual science. Obviously, everyone makes mistakes and no one can know everything, and I would be the last person to tell you to ‘only trust the experts’, but what I’m saying is that it is important to make sure that the people you decide to listen to actually have some idea what they’re talking about, are transparent about where they got their information, and can explain how they arrived at their conclusions.

The clear and increasing potential for widespread harm to our health and our environment is more than enough reason to reduce plastic production as much as we can and, where we cannot, to minimize our exposure and limit the spread of plastics into the environment. Sensationalizing science might seem like an effective way to draw attention to important issues, and perhaps in some cases it is, much like when a movie exaggerates or simplifies scientific advances for dramatic effect, although I would never do it myself. More often than not, though, these claims erode trust in the scientific method and end up undermining the very causes they are meant to advance.
